Paxos v2 and Lightweight Transactions in Apache Cassandra®

By Johnny Miller

TLDR

Lightweight Transactions (LWTs) in Apache Cassandra provide linearizable consistency in distributed environments, which is essential for preventing concurrent overwrites and ensuring unique entries. As we explore in this blog, LWTs rely on the Paxos consensus protocol, which has a new implementation, Paxos v2, available in Cassandra 4.1+. This upgrade brings significant performance improvements and fewer WAN round trips, along with new repair requirements. However, it's still important to use LWTs judiciously and not as a default approach for all Cassandra data updates. By understanding the benefits and limitations of LWTs, and following the necessary configuration steps, such as setting paxos_variant to v2 and changing your applications' commit consistency level to ANY, you can use LWTs more efficiently for specific use cases without compromising Cassandra's high availability and scalability. Looking ahead, the upcoming integration of the Accord protocol for ACID transactions, expected in 5.1, promises to further expand the capabilities of Apache Cassandra.

Introduction

Cassandra is a powerful distributed database that excels in high availability and partition tolerance, making it a popular choice for applications requiring rapid data ingestion, high availability, horizontal scalability and multi-DC/Region deployments. However, its Last-Write-Wins (LWW) conflict resolution strategy can lead to problems in scenarios involving concurrent writes. To address this, Cassandra offers Lightweight Transactions (LWTs), which ensure linearizable consistency akin to serializable transactions in traditional databases.

This blog delves into the mechanics of LWTs and the new implementation of Paxos, the protocol that underpins them, which arrived in Cassandra 4.1 with improved performance and reliability. We explore how LWTs prevent issues like concurrent overwrites and ensure unique entries in multi-client environments. We also discuss the operational considerations of enabling Paxos v2 and highlight its benefits. As Cassandra evolves towards supporting ACID transactions through the ongoing implementation of CEP-15: General Purpose Transactions, understanding these features becomes crucial for developers aiming to leverage Cassandra's full potential in distributed systems.

LWTs in Cassandra

Cassandra is a distributed database that prioritises availability and partition tolerance. It uses a Last-Write-Wins (LWW) mechanism to resolve conflicts, where the most recent write (based on timestamp) is considered the correct version of data. This approach provides considerable benefits that enhance the database's performance and availability such as:

- High Availability and Fault Tolerance: LWW ensures that data is always accessible, even if some nodes are down or partitioned. This is because the strategy relies on timestamps to resolve conflicts, allowing the most recent write to prevail regardless of the order of arrival.

- Simplified Conflict Resolution: LWW simplifies conflict resolution by automatically choosing the most recent write based on timestamps. This approach eliminates the need for complex locking mechanisms or transactional isolation, making it faster and more efficient for high-throughput applications.

- Scalability and Performance: LWW supports high scalability and performance by allowing concurrent writes without the overhead of strict consistency checks. This makes Cassandra particularly suitable for applications that require rapid data ingestion and high availability.

- Flexibility and Adaptability: The use of LWW allows Cassandra to accommodate different types of data and queries, providing a flexible data model that can adapt to various application needs.

LWW relies on accurate timestamps to resolve conflicts in Cassandra. That's why it's crucial to keep time synchronised across your Cassandra database and applications. If clocks are out of sync, older data might be mistakenly considered the most recent, leading to inconsistencies. To prevent this, use tools like NTP and Chrony to keep clocks in sync. Additionally, use AxonOps to monitor and alert on time synchronisation issues, ensuring your timestamps are accurate and consistent across the cluster.

However, LWW also has limitations, such as potential data loss or inconsistency due to the reliance on timestamps and the possibility of concurrent writes overwriting each other. To provide a solution to this, Cassandra offers Lightweight Transactions (LWTs).
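To make the timestamp dependence concrete, here is a minimal Python sketch of last-write-wins resolution. It is a toy model, not Cassandra's code: the `Write` class, the `lww_merge` function, the sample values and the tie-breaking rule are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Write:
    value: str
    timestamp_us: int  # write timestamp in microseconds, taken from the writer's clock


def lww_merge(a: Write, b: Write) -> Write:
    """Return the winning write: the one carrying the higher timestamp."""
    # On a tie this toy simply keeps `a`; that rule is an assumption of the sketch.
    return b if b.timestamp_us > a.timestamp_us else a


# Two clients write the same cell; the second write really happens 500 us later.
first = Write("alice@old.example", timestamp_us=1_000_000)
second = Write("alice@new.example", timestamp_us=1_000_500)
print(lww_merge(first, second).value)  # alice@new.example

# Same sequence of events, but the second client's clock runs 2 ms slow.
skewed_second = Write("alice@new.example", timestamp_us=1_000_500 - 2_000)
print(lww_merge(first, skewed_second).value)  # alice@old.example
```

In the second case the write that happened later loses, purely because of clock skew, which is why time synchronisation matters so much.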

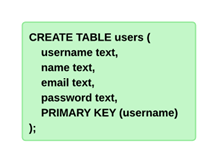

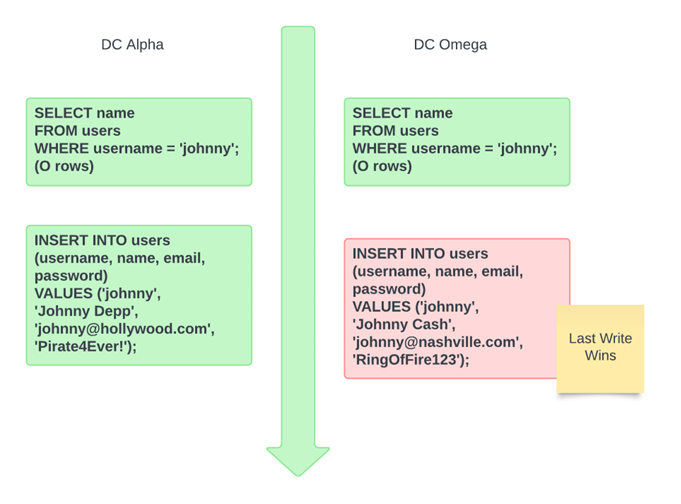

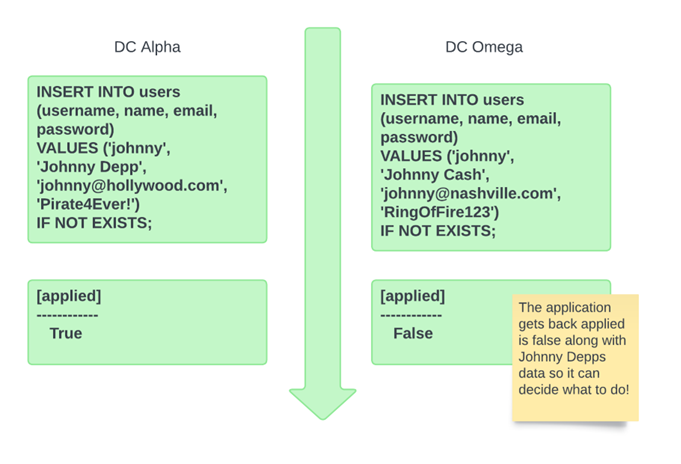

Example Problem That LWTs Solve

Consider a scenario where two users named Johnny Depp and Johnny Cash are trying to sign up for a website using the username "johnny." Johnny Depp is interacting with Cassandra in Data Center Alpha, while Johnny Cash is in Data Center Omega. Both users check if the username "johnny" is available by querying the users table. Since neither finds an existing entry for "johnny," they both proceed to insert this username into the database. In a typical Last Write Wins (LWW) scenario, whichever user's write operation reaches the database last would overwrite the previous one, potentially leading to data inconsistency and an upset Johnny Depp. In the example below, Johnny Cash wins as his insert has overwritten Johnny Depp’s account details.

This approach can lead to issues in scenarios where concurrent writes need coordination, such as ensuring unique entries or preventing overwrites in multi-client environments.

LWTs were introduced to provide a mechanism for achieving linearizable consistency, akin to serializable transactions in traditional databases. This is crucial for operations where multiple clients might attempt to modify the same piece of data simultaneously, such as creating unique user accounts or updating inventory counts.

If an LWT were used in the same scenario (see below), Johnny Depp would get back a result with applied set to true, and Johnny Cash would get back a result with applied set to false. He could then choose a new username without overwriting the existing data.

This LWT statement uses the IF NOT EXISTS clause to check whether a row with the same primary key (username) value (johnny) already exists in the users table before inserting the data. If it doesn't exist, Johnny Depp’s registration succeeds. When Johnny Cash tries to register the same username at the same time, also using IF NOT EXISTS, Cassandra ensures that only one of these transactions can succeed. As a result, Johnny Cash receives a response indicating that the insert was not applied, along with the existing data, and nothing is overwritten. It's worth noting that the database user your app connects with will need both write and read permissions when using LWTs, because the existing data is returned when applied is false.
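The difference between the two outcomes can be sketched in a few lines of Python. This is an in-memory toy, not a Cassandra client: the `UsersTable` class and its methods are hypothetical, and a plain write is simplified to "last arrival wins" rather than comparing timestamps.

```python
class UsersTable:
    """Toy in-memory stand-in for a users table keyed by username."""

    def __init__(self):
        self.rows = {}

    def insert(self, username, details):
        # Plain write: whichever insert arrives last silently overwrites the row.
        self.rows[username] = details

    def insert_if_not_exists(self, username, details):
        # Conditional write: returns (applied, existing_row), loosely mirroring
        # the applied flag and existing data described above.
        existing = self.rows.get(username)
        if existing is not None:
            return False, existing
        self.rows[username] = details
        return True, None


# Without a condition, Johnny Cash overwrites Johnny Depp.
plain = UsersTable()
plain.insert("johnny", {"name": "Johnny Depp"})
plain.insert("johnny", {"name": "Johnny Cash"})
print(plain.rows["johnny"])  # {'name': 'Johnny Cash'}

# With a condition, only the first registration is applied.
lwt = UsersTable()
depp = lwt.insert_if_not_exists("johnny", {"name": "Johnny Depp"})
cash = lwt.insert_if_not_exists("johnny", {"name": "Johnny Cash"})
print(depp)  # (True, None)
print(cash)  # (False, {'name': 'Johnny Depp'})
```

In a real cluster the hard part is making that check-and-write atomic across replicas, which is the job Paxos does for LWTs.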

Examples of Using LWT in CQL

LWTs can be utilised in several ways via CQL:

Conditional Insert

INSERT INTO users (id, name) VALUES (1, 'Alice') IF NOT EXISTS;

This ensures that Alice is inserted only if her entry does not already exist.

Conditional Update

UPDATE users SET name = 'AliceUpdated' WHERE id = 1 IF name = 'Alice';

This updates Alice's name if the current name is Alice, ensuring atomicity.

LWT in a Batch

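BEGIN BATCH

INSERT INTO users (id, name) VALUES (2, 'Bob') IF NOT EXISTS;

UPDATE users SET email = 'bob@example.com' WHERE id = 2;

APPLY BATCH;

This batch applies both statements atomically, but only if the IF NOT EXISTS condition holds. If the condition fails, nothing in the batch is applied. Note that a batch containing conditions must target a single table and a single partition, so it cannot coordinate changes across separate tables such as users, accounts and audit_logs. The email column is assumed here for illustration. Also note that an expression such as balance = balance - 50 is not valid for a regular (non-counter) column, and counter tables do not support conditions. To decrement a regular column safely, read the current value and then update it with a condition on the value you read.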

Conditional Delete

DELETE FROM users WHERE id = 1 IF EXISTS;

This deletes the user entry only if it exists.
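A conditional update behaves like a compare-and-set, and applications typically wrap it in a read-then-retry loop. The sketch below models that pattern in plain Python under stated assumptions: the `Accounts` class, `update_if_balance` and `withdraw` are hypothetical names, and the in-memory dictionary stands in for a table. It is not driver code.

```python
class Accounts:
    """Toy in-memory table of account balances keyed by account id."""

    def __init__(self, balances):
        self.balances = dict(balances)

    def read(self, account_id):
        return self.balances[account_id]

    def update_if_balance(self, account_id, expected, new_balance):
        # Models: UPDATE ... SET balance = <new> WHERE id = <id> IF balance = <expected>
        if self.balances[account_id] != expected:
            return False  # condition failed, nothing written
        self.balances[account_id] = new_balance
        return True


def withdraw(accounts, account_id, amount, max_attempts=5):
    """Read the balance, then write the new value only if it has not changed."""
    for _ in range(max_attempts):
        current = accounts.read(account_id)
        if current < amount:
            return False  # insufficient funds
        if accounts.update_if_balance(account_id, current, current - amount):
            return True
        # Another writer changed the balance in between; re-read and retry.
    return False


accounts = Accounts({1: 80})
print(withdraw(accounts, 1, 50))  # True
print(withdraw(accounts, 1, 50))  # False
print(accounts.read(1))           # 30
```

The retry limit is a design choice: under heavy contention on one row, bounding the attempts keeps a client from spinning indefinitely.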

Word of Caution on using LWTs

While LWTs may seem like a silver bullet, it's essential to use them only when you need them. The temptation to use them for all Cassandra updates might be strong, but it's crucial to resist this urge and understand the trade-offs you are making by leveraging this feature.

LWTs rely on the Paxos consensus protocol so that replicas agree on the outcome of each conditional write. While this provides strong guarantees, it comes at a cost. The protocol requires multiple round trips between nodes, which can slow down your application and put extra load on your cluster. In fact, with the Paxos v1 implementation, each LWT involves four round trips, some of which may cross data centres, which can lead to timeouts and decreased performance.

So, when should you use LWTs? Reserve them for situations where strict consistency is crucial, such as preventing concurrent overwrites or ensuring unique entries. By using LWTs only when necessary, you can avoid performance bottlenecks and keep your application running smoothly.

What is Paxos?

In the world of computer science, consensus algorithms are the unsung heroes that keep distributed systems running smoothly. These algorithms are essential for ensuring that multiple nodes in a system can agree on a single data value, even when some nodes fail or behave unpredictably. This capability is crucial for maintaining consistency, fault tolerance, and coordination in systems like databases, blockchain networks, and cloud computing platforms.

Several consensus algorithms have become staples in the industry such as:

- Raft: Known for its simplicity and ease of implementation, Raft is used in systems like etcd and Consul to provide strong consistency.

- Zab (Zookeeper Atomic Broadcast): Utilised by Apache ZooKeeper, Zab ensures that all nodes see the same sequence of state updates.

- Byzantine Fault Tolerance (BFT): Protocols like PBFT handle faulty or malicious nodes, ensuring consensus even under adversarial conditions.

Paxos is a popular algorithm renowned for its ability to achieve consensus in asynchronous environments. It was designed by Leslie Lamport (you can find his paper "Paxos Made Simple" here) and is valued for its robustness in scenarios where network delays and node failures are common.

Paxos operates by having a set of proposers suggest values to a group of acceptors. Once a majority of acceptors agree on a value, it becomes chosen. Despite its theoretical complexity, Paxos has been implemented in various systems requiring high reliability and fault tolerance.
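The proposer and acceptor roles described above can be sketched as a textbook single-decree Paxos in Python. This is a simplified, in-process illustration with no networking or failures, and it is not Cassandra's implementation; the `Acceptor` class and `propose` function are names chosen for this sketch.

```python
class Acceptor:
    def __init__(self):
        self.promised = 0          # highest ballot this acceptor has promised
        self.accepted_ballot = 0   # ballot of the value it accepted (0 = none)
        self.accepted_value = None

    def prepare(self, ballot):
        # Promise to ignore lower ballots and report any value already accepted.
        if ballot > self.promised:
            self.promised = ballot
            return True, self.accepted_ballot, self.accepted_value
        return False, None, None

    def accept(self, ballot, value):
        if ballot >= self.promised:
            self.promised = ballot
            self.accepted_ballot = ballot
            self.accepted_value = value
            return True
        return False


def propose(acceptors, ballot, value):
    """Run one round; return the chosen value, or None if no majority was reached."""
    majority = len(acceptors) // 2 + 1

    # Phase 1: collect promises from the acceptors.
    replies = [a.prepare(ballot) for a in acceptors]
    granted = [(b, v) for ok, b, v in replies if ok]
    if len(granted) < majority:
        return None

    # If any acceptor already accepted a value, adopt the one with the highest ballot.
    prior_ballot, prior_value = max(granted, key=lambda reply: reply[0])
    if prior_ballot > 0:
        value = prior_value

    # Phase 2: ask the acceptors to accept the value.
    accepted = sum(a.accept(ballot, value) for a in acceptors)
    return value if accepted >= majority else None


acceptors = [Acceptor() for _ in range(3)]
print(propose(acceptors, 1, "depp"))   # depp
print(propose(acceptors, 2, "cash"))   # depp
print(propose(acceptors, 1, "stale"))  # None
```

The second proposer ends up confirming the value that was already chosen, and the stale ballot is rejected outright. That is the property LWTs build on: once a value is chosen, later proposals cannot replace it.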

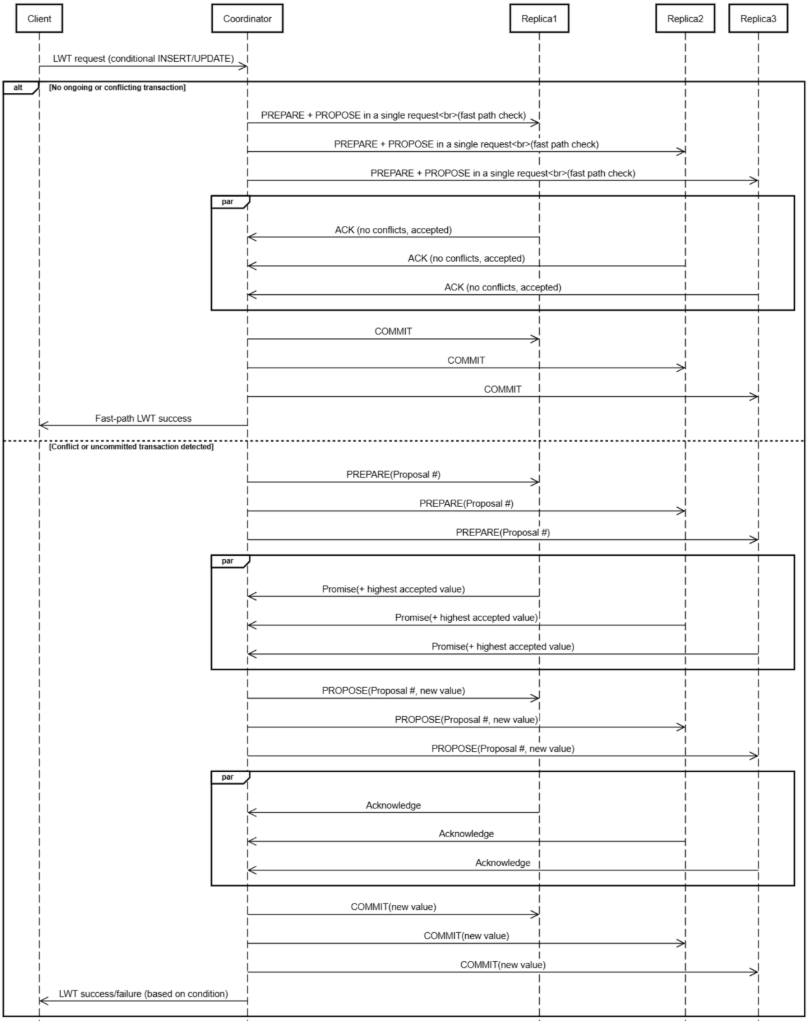

Given Cassandra's own design priorities, which emphasize high availability and fault tolerance in the face of network partitions and node failures, Paxos is a natural fit. By leveraging Paxos, Cassandra's Lightweight Transactions can provide strong consistency guarantees even in the most challenging distributed environments, making it an ideal choice for applications that require both scalability and reliability. The following sequence diagram describes Cassandra’s implementation of the Paxos algorithm.

Paxos v2

The Apache Cassandra project introduced Paxos v2 as an optional setting in Cassandra 4.1. It is a significant improvement on the original Paxos v1 implementation and focuses on enhancing performance, safety, and operational efficiency. The improvements in Paxos v2 are outlined in the Cassandra Enhancement Proposal (CEP-14) and were implemented as part of CASSANDRA-17164.

Highlights of v2

- Performance Enhancements: Paxos v2 improves the way messages are exchanged between nodes. In certain situations, it cuts the number of messages in half, which is a significant win for multi-node clusters.

- Reduced WAN Communications: the new implementation reduces the number of wide area network (WAN) round-trips needed when compared to v1. This is particularly beneficial for Cassandra clusters with distributed data centres across geographic regions.

- Automatic Paxos Repair Mechanism: Paxos v2 comes with a new internal repair mechanism that enhances the effectiveness of LWT operations. Paxos repairs run automatically on each node every 5 minutes, with no need to trigger them manually. In 4.1, automatic Paxos repairs are always enabled. In 5.0 they can be disabled if needed, in which case you can run Paxos repairs manually or, using AxonOps, set up a scheduled Paxos-only repair at an interval you choose.

- Commit Consistency Level Requirements: Another difference lies in the commit consistency levels chosen for LWT writes under v2. The commit consistency level should ideally be set to ANY or LOCAL_QUORUM. This is an important change developers must implement to fully leverage Paxos v2 improvements. It minimises the WAN round-trips required for completing transactions, significantly enhancing the responsiveness of LWT requests using v2.
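To get a feel for why fewer round trips matter across data centres, here is a back-of-the-envelope calculation in Python. The four round trips for v1 come from earlier in this article. Treating v2 as two round trips is an assumption that takes the "cut in half" best case literally, and the RTT figures are illustrative, not measurements.

```python
V1_ROUND_TRIPS = 4                     # stated in this article for Paxos v1
V2_ROUND_TRIPS = V1_ROUND_TRIPS // 2   # assumption: best case, "cut in half" taken literally


def lwt_latency_ms(round_trips: int, rtt_ms: float) -> float:
    """Rough lower bound: sequential round trips multiplied by the network RTT."""
    return round_trips * rtt_ms


# Illustrative round-trip times only; measure your own network.
for label, rtt_ms in [("same DC", 1.0), ("cross-DC", 80.0)]:
    v1 = lwt_latency_ms(V1_ROUND_TRIPS, rtt_ms)
    v2 = lwt_latency_ms(V2_ROUND_TRIPS, rtt_ms)
    print(f"{label}: v1 >= {v1:.0f} ms, v2 >= {v2:.0f} ms")
# same DC: v1 >= 4 ms, v2 >= 2 ms
# cross-DC: v1 >= 320 ms, v2 >= 160 ms
```

The absolute numbers will differ in practice, but the shape of the result holds: every round trip removed from the WAN path saves a full cross-DC RTT per transaction.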

Paxos v2 Implementation

The following sequence diagram describes the Paxos v2 implementation with Cassandra.

Key Differences in Paxos v2 (Cassandra 4.1)

- Combined “Prepare” & “Propose” (Fast Path)

  - If no uncommitted transaction or conflict is found, Cassandra can short-circuit the usual multi-phase process.

  - The coordinator sends a combined PREPARE + PROPOSE in one round.

  - Replicas can immediately accept if they detect no existing proposal or conflict, reducing network round trips from two phases to one.

- Fallback to Multi-Phase on Conflict

  - If any replica reports an ongoing proposal, a conflict, or an uncommitted transaction, Cassandra falls back to the classic multi-phase Paxos flow (Prepare -> Propose -> Commit).

  - This ensures correctness and linearizability even under contention.

- Ephemeral Data and Tracking

  - Cassandra 4.1 may track proposals or partial commits in ephemeral in-memory tables/structures. This helps to quickly detect whether a fast path is possible or if a transaction is in-flight, without always doing a full “read” phase.

- Performance Gains

  - Most LWTs in real workloads are uncontended (i.e., no concurrent transactions on the same partition). Paxos v2 significantly speeds up these uncontended cases.

  - In the event of contention, the algorithm gracefully reverts to multi-phase Paxos, preserving consistency guarantees.

Overall, Paxos v2 in Cassandra 4.1 preserves the same correctness properties as the classic LWT approach but optimises away unnecessary phases when there is no contention.

Switching to Paxos v2

Now that you've seen the benefits of Paxos v2, you are probably eager to start using it in your Cassandra cluster. And why not? With its improved performance, reduced latency, and more efficient WAN communications, Paxos v2 is a compelling upgrade for anyone using LWTs.

Switching from Paxos v1 to Paxos v2 in Cassandra 4.1 or 5.0 involves several critical steps and configuration changes to ensure a smooth transition. Here’s a detailed breakdown of the process:

1. Set paxos_variant to v2

You need to update the paxos_variant setting to v2 across your cluster. This can be done either via JMX or by modifying the cassandra.yaml configuration file and restarting the Cassandra process. It's essential that this setting is consistent across all nodes to avoid interoperability issues. If you choose to change it via JMX, make sure you also update cassandra.yaml so that the change is persistent.

2. Enable and run a Paxos repair

It is crucial to run Paxos repairs frequently, either automatically or on a schedule. As previously mentioned, Paxos repairs run automatically every 5 minutes when using v2. Additionally, unlike standard repairs, Paxos repairs are lightweight and can be scheduled more frequently, possibly once every hour, to keep the consensus state correctly synchronised among nodes. This improves reliability and performance when using LWTs. Before moving on, either wait for the automatic Paxos repairs to complete or run a Paxos repair yourself (see the --paxos-only flag in nodetool, or run a Paxos repair via the AxonOps repair service).

3. Set paxos_state_purging to repaired: After ensuring that automatic or regular Paxos repairs are in place, set paxos_state_purging to repaired in the cassandra.yaml configuration file. This can also be done via JMX, but make sure you update cassandra.yaml so the change is persistent. This setting allows the system to automatically purge old Paxos state while maintaining the integrity of ongoing transactions. Note that once this has been set, you cannot revert to paxos_state_purging: legacy.

If you need to disable Paxos repairs for an extended period or revert to v1, you'll also need to roll back your applications' consistency change (see step 4) and set paxos_state_purging to gc_grace.

4. Update Application Consistency Level: Applications using LWTs for write operations should set their commit consistency level to ANY (preferably) or LOCAL_QUORUM. This minimises the WAN round trips required to complete transactions, significantly enhancing the responsiveness of LWT requests under v2.

5. Testing the Upgrade: Before deploying in a production environment, thoroughly test the changes in a staging setup. Check that the app behaves as expected with Paxos v2, focusing on meeting the consistency requirements and validating system responses. Use test cases that involve conditional inserts and batch operations.

6. Monitoring and Adjustments: Post-upgrade, monitor cluster performance closely. Look for any latency issues or unusual behaviour in LWT operations and fine-tune the configuration as required. AxonOps can provide you with all the metrics and monitoring you need to keep a close eye on your cluster and repair it efficiently and effectively.

To ensure a successful transition to Paxos v2, it's essential to follow these steps, and not to skip steps 4 or 5! This will help you avoid potential issues with transaction consistency and reliability and ensure that your system continues to run smoothly. By taking the time to switch carefully, you'll be able to take full advantage of the improvements in Lightweight Transactions.
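Because the settings must end up consistent on every node, a small pre-flight check can help catch stragglers. The Python sketch below is hypothetical tooling, not part of Cassandra or AxonOps. It assumes you have already collected each node's effective settings into a dictionary (how you gather them is left out), and it only checks the two setting names and values mentioned in the steps above.

```python
# Target end state after the switch, using the setting names from the steps above.
REQUIRED = {"paxos_variant": "v2", "paxos_state_purging": "repaired"}


def check_paxos_settings(nodes):
    """Return a list of readable problems; an empty list means every node matches."""
    problems = []
    for name in sorted(nodes):
        for setting, expected in REQUIRED.items():
            actual = nodes[name].get(setting)
            if actual != expected:
                problems.append(f"{name}: {setting} is {actual!r}, expected {expected!r}")
    return problems


# Hypothetical snapshot of three nodes part-way through the switch.
cluster = {
    "node1": {"paxos_variant": "v2", "paxos_state_purging": "repaired"},
    "node2": {"paxos_variant": "v1", "paxos_state_purging": "repaired"},
    "node3": {"paxos_variant": "v2"},
}
for problem in check_paxos_settings(cluster):
    print(problem)
# node2: paxos_variant is 'v1', expected 'v2'
# node3: paxos_state_purging is None, expected 'repaired'
```

A check like this is most useful after changing settings via JMX, where it is easy to forget to persist the change in cassandra.yaml on one of the nodes.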

Why ANY?

One of the notable recommendations for applications is to use a commit consistency level of ANY when using LWT with Paxos v2. This recommendation is primarily driven by the performance enhancements introduced with the new Paxos v2 implementation, which includes significant changes such as Paxos repair and the elimination of Paxos state TTLs (Time-To-Live) in v2.

Understanding Paxos State Management in Cassandra

To appreciate why using a consistency level of ANY is beneficial, it's important to understand how Paxos state management has evolved. In previous versions, the Paxos state was subject to TTLs, meaning that it could expire and be removed after a period defined by the gc_grace_seconds setting of the table being updated by the LWT.

This posed a risk: if a commit did not reach a quorum or local quorum, there was potential for losing Paxos state metadata before subsequent operations could complete it. Essentially, this required ensuring that the write portion of a Paxos operation reached a quorum of nodes to avoid data loss.

Improvements with Paxos v2

Paxos v2 addresses these concerns by ensuring data integrity without relying on TTLs for Paxos state. The new approach involves retaining old Paxos metadata until it is verified that all operations initiated before the repair's designated time have been completed across all replicas. This change means that even if a write does not immediately reach a quorum, subsequent Paxos operations or the Paxos repair process will ensure that it eventually does. As a result, there is no longer a risk of losing data due to incomplete writes when using LWT with Paxos v2.

Performance Benefits of Consistency Level ANY

Given these improvements, using a consistency level of ANY for commit operations allows applications to benefit from reduced latency and increased performance. Since the responsibility for completing the commit has shifted to subsequent Paxos operations or the repair process, applications can commit writes with less overhead and faster response times. Bear in mind that until a commit has propagated, reads at non-serial consistency levels may not yet see the new value, so this works best where LWT-managed data is read at SERIAL or LOCAL_SERIAL, or where immediate visibility on every node is not critical and performance gains are prioritised.

ACID Transactions in Apache Cassandra

While not yet available, as we are looking at LWTs, it's important to know about an exciting new feature coming in Cassandra - ACID transactions using the Accord protocol (you can download the Accord paper here).

The work on integrating Accord is ongoing, with expectations that it will be fully implemented in Cassandra 5.1, and it's a feature everyone in the Cassandra community is very excited about. For more information on its implementation and progress have a look at CEP-15.

The Accord protocol is a new distributed transaction protocol that will bring support for ACID transactions. This development is crucial because it allows Cassandra to handle operations that require atomicity, consistency, isolation, and durability - attributes traditionally associated with relational databases.

Key Features of Accord

- Strict-Serializable Isolation: Accord ensures strict-serializable isolation, meaning transactions appear as if they were executed in some sequential order without interference from other operations.

- One Round Trip Consensus: The protocol aims to achieve consensus in one round trip, which is a significant improvement over existing protocols. This is accomplished through innovative mechanisms such as the reorder buffer and fast-path electorates, which help manage latency and maintain performance even during failures.

- Leaderless Architecture: Accord aligns with Cassandra's leaderless architecture, avoiding the need for complex leader election processes and ensuring fault tolerance and scalability.

Benefits of Implementing Accord

- Enhanced Use Cases: With ACID transactions, Cassandra can be used for more demanding applications.

- Improved Developer Experience: Developers can rely on Cassandra for complex transactional operations without resorting to multiple databases or complex code workarounds. This simplifies the development process and enhances data integrity across distributed systems.

The introduction of the Accord protocol into Cassandra represents a major leap forward in enabling ACID transactions within a distributed database. This advancement significantly broadens the scope of applications that can effectively use Cassandra. If you would like to know more about Accord, have a look at this informative talk from Benedict Elliott Smith, one of the project committers working on this new feature.

Conclusion

In this blog, we've explored the world of Lightweight Transactions in Apache Cassandra, discussing what they are, the use cases they solve, and the significant improvements brought by Paxos v2. While LWTs are not a silver bullet and should still be used only when you really need to, the enhancements in Paxos v2 make them much more performant and reliable. We have also given a brief introduction to the project's upcoming support for ACID transactions via the Accord protocol.

It's essential to remember that LWTs are designed to address specific use cases, such as preventing concurrent overwrites or ensuring unique entries, and should not be used as a default approach for all Cassandra data updates. However, when used correctly, Paxos v2 can greatly reduce the headaches of running a production Cassandra cluster whose applications rely on LWTs.

To ensure your Cassandra cluster is healthy, well-monitored, and running smoothly, it's crucial to have the right tools in place. That's where AxonOps comes in. Our comprehensive monitoring and alerting platform provides you with the insights and control you need to optimise your Cassandra cluster's performance, detect potential issues before they become incidents, and simplify your operations. With AxonOps, you can focus on what matters most - delivering exceptional service to your users. So, if you want to take your Cassandra cluster to the next level, try AxonOps today and discover the power of proactive monitoring and management.

About AxonOps

Organizations turn to AxonOps to democratise Apache Cassandra and Kafka skills through best-in-class management tooling, backed by world-class support. Built by experts, our unified monitoring and operations platform for Apache Cassandra and Kafka provides access to all of the capability required to effectively monitor and operate a Cassandra and Kafka environment via the APIs or UI of a single management control plane.
